Autonomous Driving


Autonomous driving is a central research area spanning robotics, computer vision, and artificial intelligence. It focuses on developing vehicles that can navigate and operate without human intervention. The technology underpins applications such as personal transportation, logistics, and public transit, where it promises to improve safety, reduce traffic congestion, and increase efficiency. Its primary objective is to enable vehicles to perceive their environment, make informed decisions, and execute driving tasks in real time, while obeying traffic regulations and keeping passengers safe. The field faces significant challenges: the complexity of real-world driving scenarios, the need for perception systems that stay robust across diverse environmental conditions, and the need for decision-making algorithms that handle unexpected situations reliably. Researchers in this domain therefore often combine supervised learning, reinforcement learning, and simulation-based training to develop and validate autonomous driving systems, while also exploring ways to ensure safety and reliability across driving contexts.

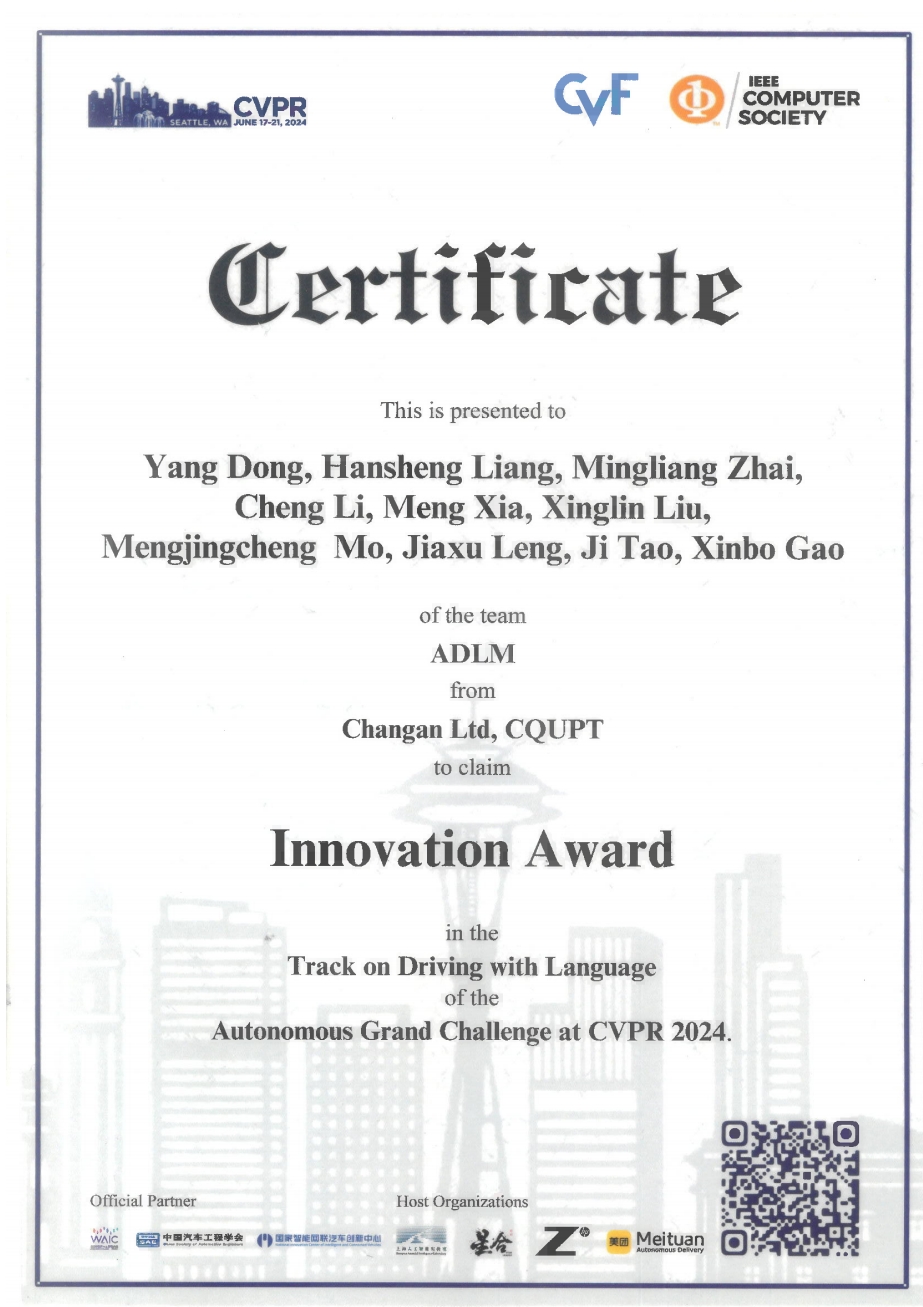

CVPR 2024 Innovation Award: Driving with Language

Our team won the sole Innovation Award in the "Driving with Language" track of the CVPR 2024 Autonomous Grand Challenge, competing against 152 teams from 14 countries and regions. Our solution addresses the weaknesses of large language models (LLMs) in perception and localization in autonomous driving scenarios. By introducing Bird's Eye View (BEV) features, we aligned the LLM with multi-view visual data, and we proposed a Graph of Thoughts (GoT) approach that substantially improved the model's understanding of driving-scene context. The solution combines the reasoning capabilities of LLMs with spatial perception, makes autonomous driving decisions more explainable, and opens new directions for applying large language models to autonomous driving.
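The announcement does not describe how the BEV features are fed to the LLM. Purely as an illustration, and not as our award-winning implementation, one common pattern treats each cell of an H x W BEV feature grid as a visual token, maps it into the LLM's embedding width with a learned linear projection, and places these tokens before the text embeddings of the question. The toy sizes, the token ordering, and the function names (`flatten_bev`, `linear`, `bev_to_llm_tokens`, `build_input_sequence`) are hypothetical, and the sketch uses plain Python lists instead of a tensor library.

```python
import random
from typing import List

Vector = List[float]


def flatten_bev(bev: List[List[Vector]]) -> List[Vector]:
    """Flatten an H x W grid of C-dim BEV cells into H*W tokens (row-major)."""
    return [cell for row in bev for cell in row]


def linear(x: Vector, weight: List[Vector], bias: Vector) -> Vector:
    """Compute y = W x + b, where weight has shape (D_out, C)."""
    return [
        sum(w_i * x_i for w_i, x_i in zip(w_row, x)) + b
        for w_row, b in zip(weight, bias)
    ]


def bev_to_llm_tokens(
    bev: List[List[Vector]], weight: List[Vector], bias: Vector
) -> List[Vector]:
    """Project every BEV cell into the (hypothetical) LLM embedding space."""
    return [linear(cell, weight, bias) for cell in flatten_bev(bev)]


def build_input_sequence(
    bev_tokens: List[Vector], text_embeddings: List[Vector]
) -> List[Vector]:
    """Hypothetical ordering: visual tokens first, then the question's text tokens."""
    return bev_tokens + text_embeddings


if __name__ == "__main__":
    random.seed(0)
    H, W, C, D = 2, 3, 4, 8  # toy sizes; real BEV grids and LLM widths are far larger
    bev = [[[random.gauss(0, 1) for _ in range(C)] for _ in range(W)] for _ in range(H)]
    weight = [[random.gauss(0, 0.1) for _ in range(C)] for _ in range(D)]
    bias = [0.0] * D
    text = [[0.0] * D for _ in range(3)]  # stand-in embeddings for a 3-token question
    seq = build_input_sequence(bev_to_llm_tokens(bev, weight, bias), text)
    print(len(seq), len(seq[0]))  # prints "9 8": 6 BEV tokens + 3 text tokens, width 8
```

In a real model the projection weights are learned jointly with (or on top of) a frozen LLM; the linear map here only shows how the shapes line up.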


ECCV 2024 Autonomous Driving Challenge: Corner Case Perception and Understanding

Our team won third place in the "Corner Case Scene Understanding" track of the ECCV 2024 Autonomous Driving Challenge. Our solution, NexusAD, is built on InternVL-2.0 and integrates depth estimation, chain-of-thought reasoning, and retrieval-augmented generation. It achieved a score of 68.97, outperforming GPT-4V on three key metrics. The competition, organized by Dalian University of Technology, HKUST, and CUHK, attracted participants from leading institutions worldwide.
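The announcement does not say how NexusAD implements retrieval. As a generic illustration only, not the NexusAD code, the retrieval step of retrieval-augmented generation can be sketched as ranking stored, annotated driving examples by cosine similarity to an embedding of the current scene, then prepending the top-k annotations to the prompt together with a common zero-shot chain-of-thought cue ("Let's think step by step."). The scene embeddings, example texts, and function names (`cosine`, `retrieve`, `build_prompt`) are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical memory: (scene embedding, annotated description) pairs.
Memory = List[Tuple[Sequence[float], str]]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: Sequence[float], memory: Memory, k: int = 2) -> List[str]:
    """Return the texts of the k stored examples most similar to the query."""
    ranked = sorted(memory, key=lambda item: cosine(query, item[0]), reverse=True)
    return [text for _, text in ranked[:k]]


def build_prompt(question: str, examples: List[str]) -> str:
    """Prepend retrieved examples, then ask the model to reason step by step."""
    context = "\n".join(f"Example: {e}" for e in examples)
    return f"{context}\nQuestion: {question}\nLet's think step by step."


if __name__ == "__main__":
    memory: Memory = [
        ([1.0, 0.0], "A cone blocks the right lane; slow down and merge left."),
        ([0.0, 1.0], "A pedestrian is crossing; stop."),
        ([0.9, 0.1], "Construction barrier ahead; change lanes."),
    ]
    scene_embedding = [1.0, 0.05]  # stand-in for an embedding of the current scene
    examples = retrieve(scene_embedding, memory, k=2)
    print(build_prompt("What should the ego vehicle do?", examples))
```

A production system would use learned embeddings and an approximate nearest-neighbour index rather than a full sort, but the ranking logic is the same.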

