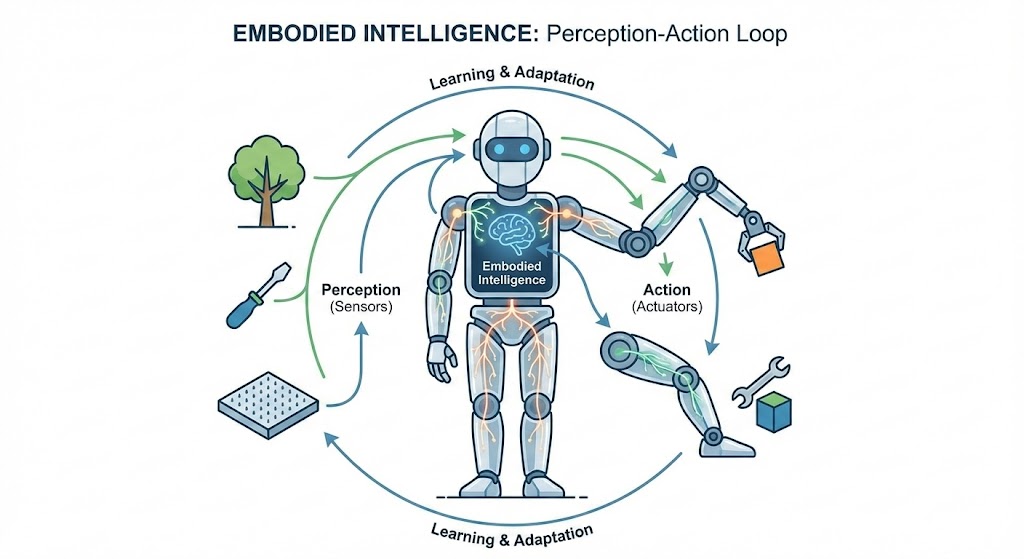

Embodied Intelligence

EI Diagram

Task Description

Embodied intelligence is an important research area spanning robotics, artificial intelligence, and cognitive science. It is dedicated to developing intelligent systems that achieve cognition and decision-making through interaction between the body and the environment. The concept is central to applications such as humanoid robots and autonomous systems, where the aim is to improve adaptability, robustness, and operational capability in complex environments. The core goal of embodied intelligence is to enable systems to perceive their surroundings, learn from interaction, and perform tasks efficiently through the synergy between physical embodiment and computation.

This field faces numerous challenges, such as modeling the dynamic relationship between the body and the environment, integrating perception and action in real time, and developing learning algorithms that can adapt to diverse scenarios. Researchers often employ methods such as reinforcement learning, bio-inspired design, and simulation testing to make embodied intelligence systems safe, efficient, and context-aware in practical applications.

Selected Papers

Exceptional Contribution

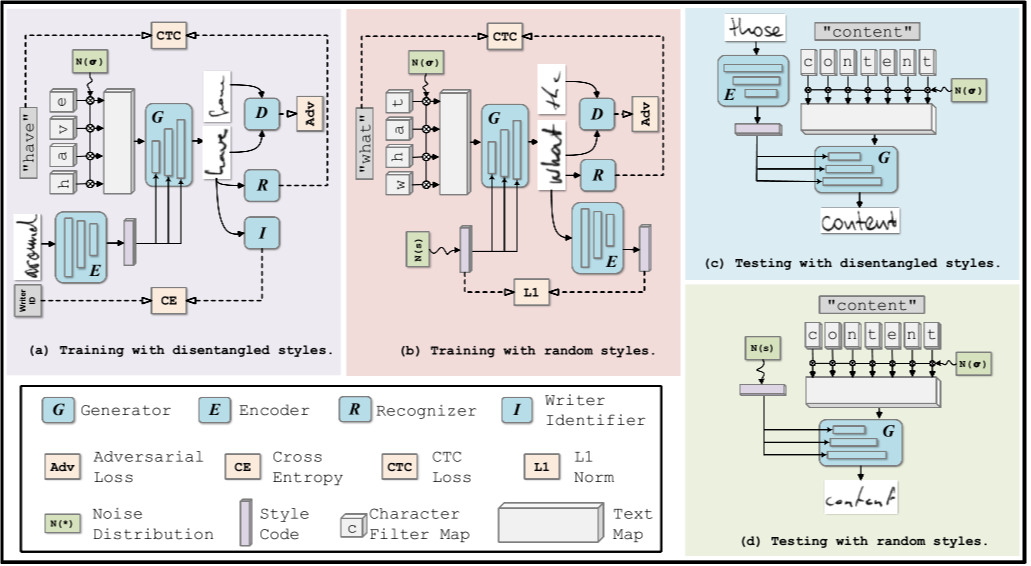

HiGAN: Handwriting Imitation Conditioned on Arbitrary-Length Texts and Disentangled Styles

Ji Gan, Weiqiang Wang*

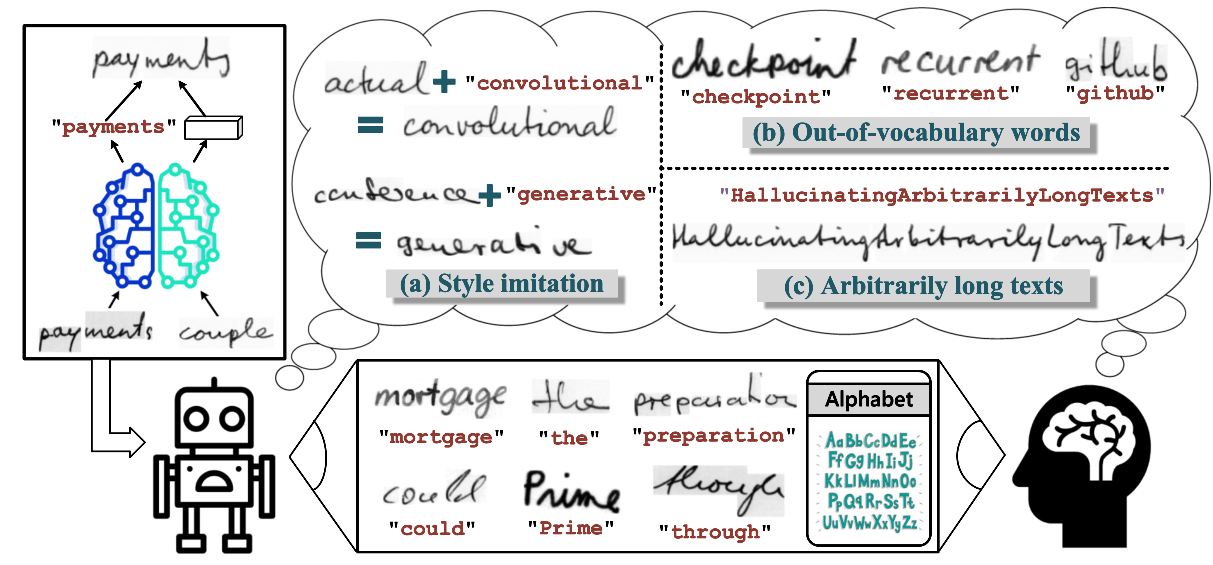

Given limited handwriting scripts, humans can easily visualize (or imagine) what handwritten words or texts with other arbitrary textual contents would look like. Moreover, a person is also able to imitate the handwriting style of provided reference samples. Humans can perform such hallucinations, perhaps because they learn to disentangle calligraphic styles from textual contents in the handwriting scripts they see. However, existing techniques do not allow computers to learn such flexible handwriting imitation. We propose a novel handwriting imitation generative adversarial network (HiGAN) to mimic such hallucinations. Specifically, HiGAN can generate variable-length handwritten words and texts conditioned on arbitrary textual contents, which are not constrained to any predefined corpus and may include out-of-vocabulary words. Moreover, HiGAN can flexibly control the handwriting style of synthetic images by disentangling calligraphic styles from the reference samples.

AAAI Conference on Artificial Intelligence, 2021
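To make the idea of conditioning on arbitrary-length text plus a separate style code more concrete, here is a toy sketch in Python. It is not HiGAN's architecture: the character embedding (a hash instead of a learned table), the AdaIN-style scale-and-shift modulation, and the names `char_embedding`, `modulate`, `generate`, `EMBED_DIM`, and `COLS_PER_CHAR` are all hypothetical. It only illustrates two properties the abstract describes: the output width grows with the length of the input text, so no fixed vocabulary is needed, and one style code can be applied to any text while the content stays fixed.

```python
import hashlib
import math

EMBED_DIM = 4       # hypothetical size of each character's content embedding
COLS_PER_CHAR = 2   # hypothetical number of output columns rendered per character


def char_embedding(ch: str) -> list[float]:
    """Deterministic stand-in for a learned character embedding table."""
    digest = hashlib.sha256(ch.encode("utf-8")).digest()
    # Map the first EMBED_DIM bytes (0..255) to values in [-1, 1].
    return [b / 127.5 - 1.0 for b in digest[:EMBED_DIM]]


def modulate(features: list[float], style: list[float]) -> list[float]:
    """AdaIN-style modulation: normalize the content, then scale/shift by style.

    `style` holds EMBED_DIM scales followed by EMBED_DIM shifts (an assumption).
    """
    mean = sum(features) / len(features)
    var = sum((f - mean) ** 2 for f in features) / len(features)
    std = math.sqrt(var + 1e-5)
    scales, shifts = style[:EMBED_DIM], style[EMBED_DIM:]
    return [s * (f - mean) / std + b for f, s, b in zip(features, scales, shifts)]


def generate(text: str, style: list[float]) -> list[list[float]]:
    """Return a toy 'image' as a list of columns; its width grows with len(text)."""
    if len(style) != 2 * EMBED_DIM:
        raise ValueError("style must hold EMBED_DIM scales and EMBED_DIM shifts")
    columns = []
    for ch in text:
        col = modulate(char_embedding(ch), style)  # content modulated by style
        columns.extend(list(col) for _ in range(COLS_PER_CHAR))
    return columns


if __name__ == "__main__":
    style_a = [1.0] * EMBED_DIM + [0.0] * EMBED_DIM  # hypothetical style code
    style_b = [2.0] * EMBED_DIM + [0.5] * EMBED_DIM  # a different style code
    print(len(generate("hi", style_a)), len(generate("handwriting", style_a)))  # 4 22
    print(generate("a", style_a) != generate("a", style_b))  # True
```

With `COLS_PER_CHAR = 2`, the two-character text yields 4 columns and the eleven-character text yields 22, and swapping the style code changes the rendered columns without changing which characters are rendered. In a real generator the embedding table, the modulation parameters, and the decoder would all be learned.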

HiGAN+: Handwriting Imitation GAN with Disentangled Representations

Ji Gan, Weiqiang Wang, Jiaxu Leng and Xinbo Gao

Humans remain far better than machines at learning: they need fewer examples to learn new concepts and can use those concepts in richer ways. Take handwriting as an example: after learning from very limited handwriting scripts, a person can easily imagine what handwritten texts would look like with other arbitrary textual contents (even unseen words or texts). Moreover, humans can imitate calligraphic styles from just a single reference handwriting sample, even one they have never seen before. Humans can perform such hallucinations, perhaps because they learn to disentangle textual contents and calligraphic styles from handwriting images. Inspired by this, we propose a novel handwriting imitation generative adversarial network (HiGAN+) for realistic handwritten text synthesis based on disentangled representations. HiGAN+ achieves precise one-shot handwriting style transfer by introducing a writer-specific auxiliary loss and a contextual loss, and it also attains good global and local consistency by refining the local details of synthetic handwriting images.

AAAI Conference on Artificial Intelligence, 2021
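The abstract names a contextual loss without giving its form. One widely used definition is the contextual loss of Mechrez et al. (2018), which scores two sets of feature vectors by how well each reference feature is matched by some generated feature, without requiring the images to be spatially aligned. The pure-Python sketch below implements that general formulation on small feature sets; it is an illustration, not HiGAN+'s implementation, and the bandwidth `h=0.5`, the `eps` value, and the function names are assumptions. In practice the features would typically be extracted by a pretrained CNN from the synthetic and reference handwriting images.

```python
import math


def cosine_distance(a: list[float], b: list[float]) -> float:
    """1 - cos(a, b); a tiny constant guards against zero-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b + 1e-12)


def contextual_loss(gen_feats: list[list[float]], ref_feats: list[list[float]],
                    h: float = 0.5, eps: float = 1e-5) -> float:
    """Return -log CX(X, Y) for generated features X and reference features Y.

    Follows the general contextual-loss recipe (Mechrez et al., 2018);
    h (bandwidth) and eps are assumed hyperparameter values.
    """
    dim = len(ref_feats[0])
    # Center both sets on the mean of the reference features.
    mu = [sum(y[k] for y in ref_feats) / len(ref_feats) for k in range(dim)]
    xs = [[v - m for v, m in zip(x, mu)] for x in gen_feats]
    ys = [[v - m for v, m in zip(y, mu)] for y in ref_feats]

    cx = []  # cx[i][j]: contextual similarity of x_i to y_j
    for x in xs:
        d = [cosine_distance(x, y) for y in ys]          # d_ij
        d_min = min(d)
        d_rel = [dij / (d_min + eps) for dij in d]      # relative distance
        w = [math.exp((1.0 - dr) / h) for dr in d_rel]  # w_ij
        total = sum(w)
        cx.append([wij / total for wij in w])            # normalize over j

    # For every reference feature y_j, keep its best match among the x_i,
    # then average over j.
    score = sum(max(row[j] for row in cx) for j in range(len(ys))) / len(ys)
    return -math.log(score)
```

For instance, with reference features `[[1, 0], [0, 1]]`, passing the same set (in any order) as the generated features gives a loss of about 0, while a collapsed generated set `[[1, 0], [1, 0]]` leaves the second reference feature unmatched and gives about log 2. Because the matching ignores feature positions, a loss of this kind compares style statistics even when the generated and reference texts differ in content.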